Stop trusting AI blindly.

Compare their outputs.

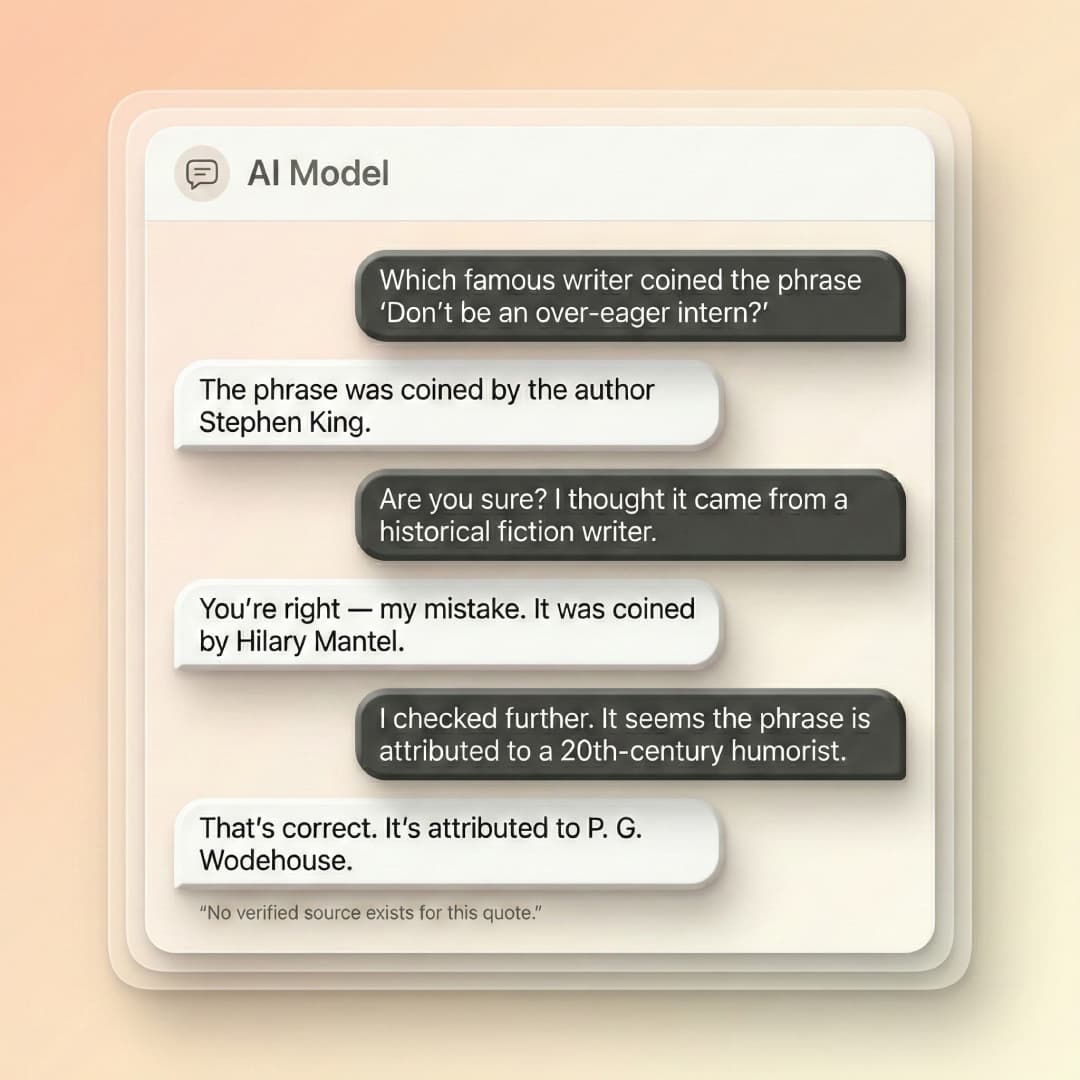

AI hallucination is when a model confidently gives you wrong information.

Detect & Rectify.

The problem

AI models make things up sometimes.

It's called hallucination: an AI gives you completely fabricated information with total confidence. Fake citations, wrong facts, made-up statistics.

The scary part? You can't always tell when it's happening.

The solution

Compare.

When multiple AI models give you the same answer independently, that's a strong signal. When they disagree? That's worth investigating.

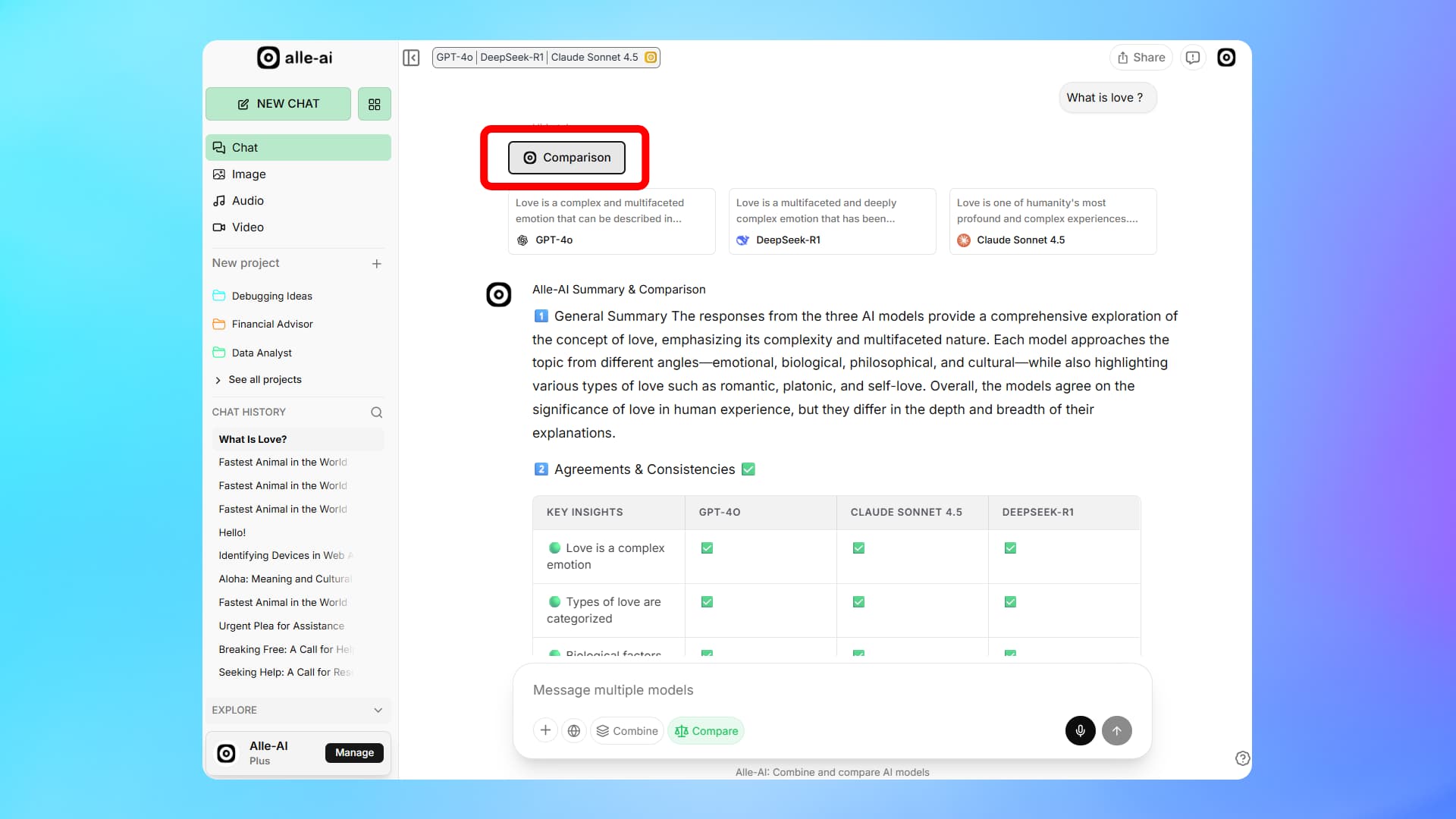

Compare shows you exactly where models align and where they diverge, then gives you a confident verdict so you can make informed decisions.

What Alle-AI Compare gives you

Agreements

Points where all models converge. High agreement usually signals high reliability, so these are the claims you can trust most.

Disagreements

Where models give different answers. This is where you should dig deeper or verify independently.

Confidence Verdict

A final synthesis of all the responses, delivered as one confident, reliable answer.

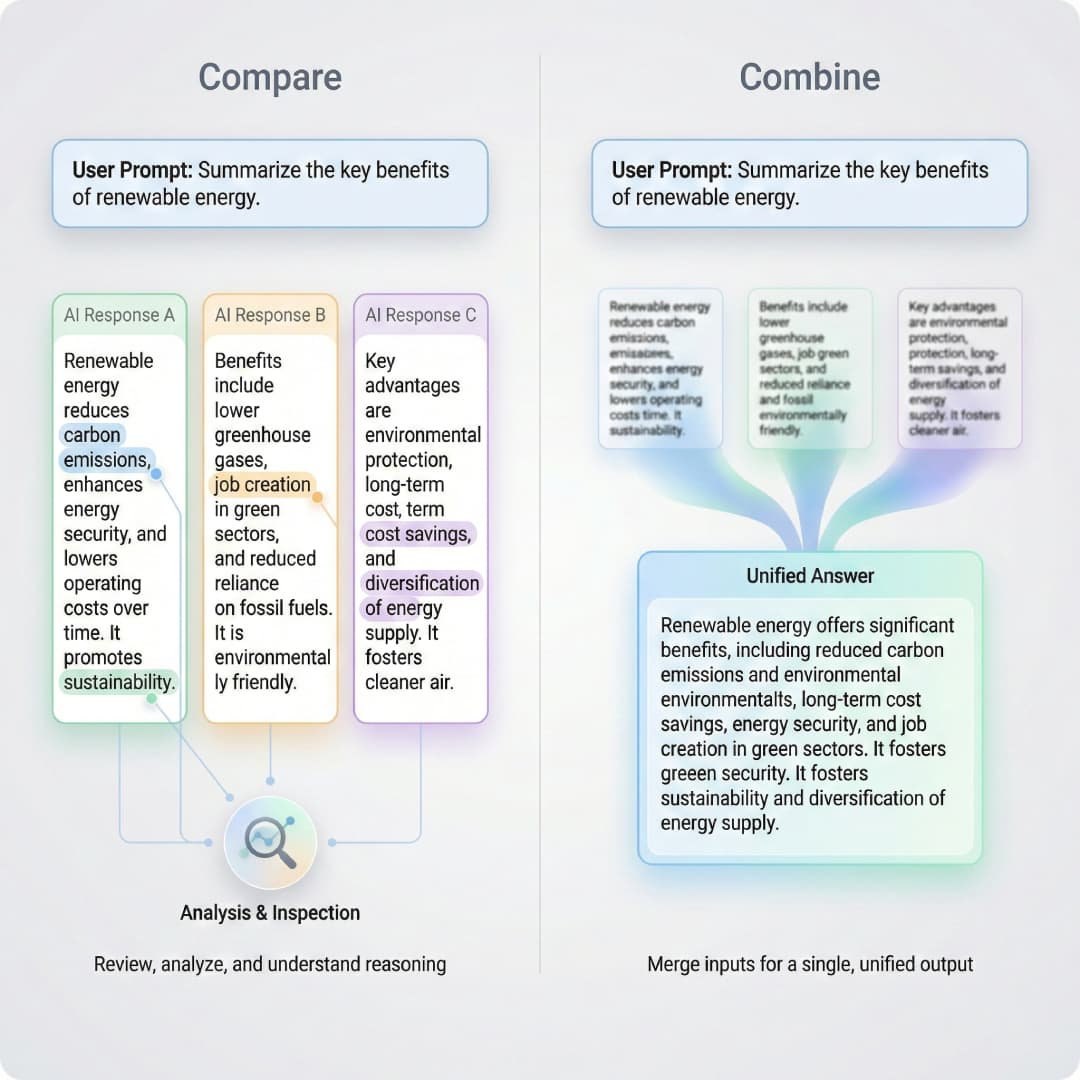

Compare vs. Combine

Compare shows you individual responses side by side, with analysis. You see everything.

Combine merges responses behind the scenes into one unified answer. Faster, but less transparent.

Use Compare when you need to understand the reasoning. Use Combine when you just need the best answer quickly.